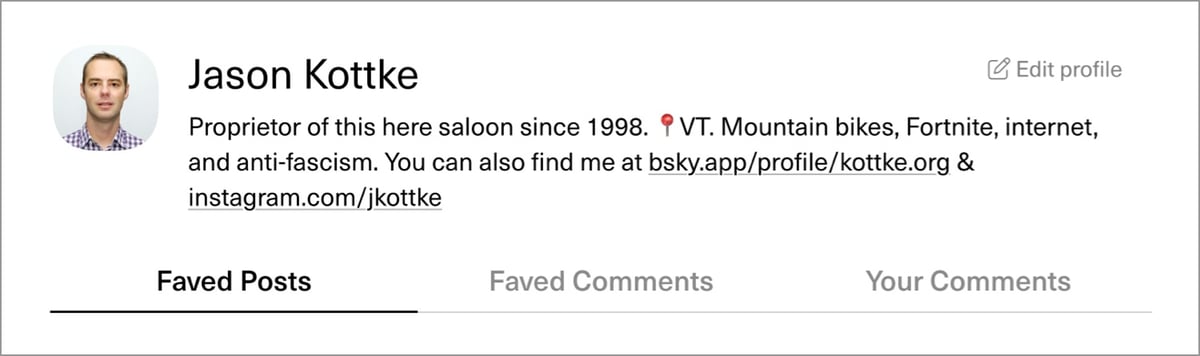

Can’t stop, won’t stop. On the heels of the refreshed Rolodex from earlier in the week, I’ve pushed another “Just Enough Social” feature to the site: member bios & profile pics. Here’s what that looks like:

You can find a link to your profile by 1) clicking on your name in the menu in the upper right-hand corner of the site (or under the hamburger menu on mobile); 2) clicking on the “edit profile” link by your name at the bottom of any post with active comments; or 3) clicking on your name or profile pic in any comment thread. You can change your username, provide a short bio (300 character limit, up to 2 URLs), and upload a profile pic (jpg, png, webp). Check the community guidelines for more advice/info.
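For the code-curious, here’s a rough sketch of what those limits amount to. This is not the site’s actual code: the function names, the URL-matching pattern (it only counts links that start with http:// or https://), and the decision to check file extensions rather than file contents are all assumptions made for illustration.

```python
import re

# Limits taken from the post; everything else here is a hypothetical sketch.
MAX_BIO_CHARS = 300
MAX_BIO_URLS = 2
ALLOWED_PIC_EXTENSIONS = {"jpg", "png", "webp"}

# Assumption: a "URL" is anything starting with http:// or https://.
URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def bio_problems(bio: str) -> list:
    """Return a list of human-readable problems; an empty list means the bio is fine."""
    problems = []
    if len(bio) > MAX_BIO_CHARS:
        problems.append(f"Bio is {len(bio)} characters; the limit is {MAX_BIO_CHARS}.")
    url_count = len(URL_PATTERN.findall(bio))
    if url_count > MAX_BIO_URLS:
        problems.append(f"Bio has {url_count} URLs; the limit is {MAX_BIO_URLS}.")
    return problems


def is_allowed_pic(filename: str) -> bool:
    """Check the file extension only (case-insensitive)."""
    _, dot, extension = filename.rpartition(".")
    return bool(dot) and extension.lower() in ALLOWED_PIC_EXTENSIONS
```

Calling `bio_problems("Designer, dog person. https://example.com")` returns an empty list, while a bio with three links gets one complaint back.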

The idea with this feature is to provide a lightweight way for KDO members to get to know who they’re conversing with in the comments without having to share that information with the entire internet (in the form of a full-blown social media profile). As a member, you’re in control of what you share in your bio and which profile pic you use. So here’s how it works right now (i.e. who can see what and where):

- Your member profile pic & display name are fully public…they’re shown next to comments you’ve made on the site (which are also fully public). Profile pics are optional. Display names can be changed from the full name used in your Memberful account; you don’t even need to use your real name (again, see the community guidelines for more info on this).

- Your bio can only be viewed by other members with active memberships. As a member, you can view another member’s bio by hovering over their name or profile pic in a comment thread or in the comment lists on your profile page. Bios are not public on the internet.

- Your profile page can only be viewed by you. Other members cannot see the posts or comments you’ve faved or the list of comments you’ve made. They also cannot see your email address, your real name (only your display name), membership level, the date you joined, whether you’ll renew, or your member renewal date.

- Inactive members can modify their bios & profile pics and see their own profile pages (with faves & comments), but can’t see other members’ bios.
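If it helps to see the rules above as logic, here’s a minimal sketch. It’s an illustration, not how the site is actually built: the `Viewer` type, the item names, and the `can_view` function are all made up, and it assumes that members can always see their own bio (the rules above only say inactive members can modify theirs).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Viewer:
    member_id: Optional[str]  # None means a logged-out visitor
    is_active: bool = False   # True for a member with an active membership


def can_view(viewer: Viewer, owner_id: str, item: str) -> bool:
    """Hypothetical visibility check for one piece of a member's profile."""
    is_owner = viewer.member_id is not None and viewer.member_id == owner_id

    if item in ("display_name", "profile_pic"):
        return True  # fully public, shown next to public comments
    if item == "bio":
        # Active members can read bios; assumption: you can always read your own.
        return is_owner or viewer.is_active
    if item == "profile_page":
        return is_owner  # faves and comment lists are visible only to you
    return False  # anything else (email, renewal date, etc.) is hidden from others
```

So `can_view(Viewer("bob", is_active=True), "alice", "bio")` is true, but the same call with `"profile_page"` is false.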

This level of detail about something that’s existed on the internet since the dawn of time (message board profiles, essentially) might seem tedious, but I’m being clear and straightforward about how this works because I want people to feel comfortable connecting with each other here as much or as little as each person wants. Many of you will probably share things like your personal website, job, hobbies, or social media accounts in your bios. Put your Signal handle or email address in there if you want. Gregarious types: put your phone number in your profile if you feel comfortable with that (not recommending that tbh). Or you can be super private or deliberately vague: on KDO, no one knows you’re a dog. Ditto for the profile pic: anything from your headshot to a photo of your pet to a Mark Rothko abstract goes. Totally up to you.

The comments, the Rolodex, and now member profiles all operate under the same principle: Just Enough Social. Facebook, Instagram, and TikTok are too overwhelming, and stand-alone blogs (like KDO circa 2 years ago) don’t offer much in the way of community. I’m vectoring toward the lightweight Baby Bear option of getting readers talking with each other in the easiest possible way & exploring the larger web community that KDO is a part of. There’s more work to do, but I’m happy with the direction it’s going.

One last thing before I go. I hope this goes without saying with this fine crew but I will say it anyway: if you are going to reach out to someone using the info in their KDO profile/bio, do not be a dick. Someone putting their website address or email in their bio is not an invitation for inappropriate behavior or taking a disagreement outside the bounds of the community guidelines. Enough said about that, I hope.

Ok, I’ll let you go freshen up your profile if you’d like. Lemme know if you have any feedback, questions, concerns, or even attaboys.

Tags: kottke.org